
seoKeywordResearchTool

@websrai

@jsxImportSource https://esm.sh/react@18.2.0

HTTP

const AI_PROVIDERS = [

"OpenAI",

"Anthropic",

"Groq",

"Google AI",

nighthawks

@yawnxyz

This is nighthawks, an experimental NPC character generator that remembers details about conversations.

Import this into other workflows for now; a UI is coming soon!

Script

// model: 'mixtral-8x7b-32768',

// model: 'llama3-8b-8192',

// provider: 'anthropic',

// model: 'claude-3-haiku-20240307',

system: [

provider: 'groq',

model: 'mixtral-8x7b-32768',

// provider: 'anthropic',

// model: 'claude-3-opus-20240229',

// model: 'claude-3-sonnet-20240229',
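The description says nighthawks remembers details about conversations, but the snippet only shows the provider and model settings. Below is a minimal sketch of one way such memory could work, assuming a simple in-memory list of facts that is folded into the system prompt sent to whichever provider is configured. `CharacterMemory`, `remember`, and `buildSystemPrompt` are hypothetical names and are not taken from the val.

```typescript
// Hypothetical sketch: an in-memory store of facts an NPC has learned (not the val's code).
type MemoryEntry = { turn: number; fact: string };

class CharacterMemory {
  private entries: MemoryEntry[] = [];
  private turn = 0;
  private readonly name: string;
  private readonly maxEntries: number;

  constructor(name: string, maxEntries = 20) {
    this.name = name;
    this.maxEntries = maxEntries;
  }

  // Record a fact learned during the conversation, dropping the oldest when full.
  remember(fact: string): void {
    this.turn += 1;
    this.entries.push({ turn: this.turn, fact });
    if (this.entries.length > this.maxEntries) {
      this.entries = this.entries.slice(-this.maxEntries);
    }
  }

  // Build a system prompt that reminds the model what the character remembers.
  buildSystemPrompt(persona: string): string {
    const facts = this.entries.map((e) => `- ${e.fact}`).join("\n");
    return `You are ${this.name}. ${persona}\nThings you remember:\n${facts || "- (nothing yet)"}`;
  }
}

const npc = new CharacterMemory("Mara", 2);
npc.remember("The player's name is Sam.");
npc.remember("Sam is looking for a lost ring.");
npc.remember("Sam distrusts the blacksmith.");
console.log(npc.buildSystemPrompt("A cautious innkeeper."));
```

Capping the number of stored facts keeps the prompt short. A real implementation might instead summarize old facts or rank them by relevance.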

npmExample

@taowen

An interactive, runnable TypeScript val by taowen

Script

export let npmExample = (async () => {

// Load the Anthropic SDK from npm with a dynamic import.
const { HUMAN_PROMPT, AI_PROMPT, Client } = await import("npm:@anthropic-ai/sdk");

const client = new Client(
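This val uses the early `@anthropic-ai/sdk` API (`Client`, `HUMAN_PROMPT`, `AI_PROMPT`), which targeted the legacy text-completions format. In that format a prompt is a single string of alternating turns that ends with the assistant marker. The following is a minimal sketch of that format. It redefines the two constants locally with the values the SDK exported (`"\n\nHuman:"` and `"\n\nAssistant:"`) so the example runs without the SDK. `buildLegacyPrompt` is a hypothetical helper.

```typescript
// Local copies of the values the legacy SDK exported as HUMAN_PROMPT and AI_PROMPT.
const HUMAN_PROMPT = "\n\nHuman:";
const AI_PROMPT = "\n\nAssistant:";

type Turn = { role: "human" | "assistant"; text: string };

// Hypothetical helper: flatten a conversation into one legacy completion prompt.
// The prompt ends with AI_PROMPT so the model continues speaking as the assistant.
function buildLegacyPrompt(turns: Turn[]): string {
  const body = turns
    .map((t) => `${t.role === "human" ? HUMAN_PROMPT : AI_PROMPT} ${t.text}`)
    .join("");
  return `${body}${AI_PROMPT}`;
}

const legacyPrompt = buildLegacyPrompt([{ role: "human", text: "Write a haiku about Deno." }]);
console.log(JSON.stringify(legacyPrompt));
```

Newer versions of the SDK replace this string format with the Messages API (`anthropic.messages.create`), where turns are passed as a structured array.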

sonnet

@manyone

Anthropic Claude: Claude 3.5 Sonnet

Script

# Anthropic Claude

Claude 3.5 Sonnet

import Anthropic from "npm:@anthropic-ai/sdk";

export default async function generatePoem() {
  try {
    // Retrieve the Anthropic API key from environment variables
    const apiKey = Deno.env.get("ANTHROPIC_API_KEY");

    // Check if API key is present
    if (!apiKey) {
      throw new Error("Anthropic API key is missing. Please set the ANTHROPIC_API_KEY environment variable.");
    }

    // Create Anthropic instance with the API key
    const anthropic = new Anthropic({
      apiKey: apiKey,
    });

    console.log("Anthropic API Key Status: Present ✅");
    console.log("Attempting to create message...");

    const msg = await anthropic.messages.create({
      model: "claude-3-5-sonnet-20240620",
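The snippet stops inside the `messages.create` call. Below is a sketch of the remaining pieces, assuming the standard Messages API shape: a request with `model`, `max_tokens`, and a `messages` array, and a response whose `content` is an array of blocks. `buildPoemRequest`, `extractText`, the prompt wording, and the `max_tokens` value are illustrative assumptions, not the val's code.

```typescript
// Simplified local types mirroring the Messages API request and response shapes.
type MessageParam = { role: "user" | "assistant"; content: string };
type TextBlock = { type: "text"; text: string };
type ToolUseBlock = { type: "tool_use"; id: string; name: string; input: unknown };
type ContentBlock = TextBlock | ToolUseBlock;

interface MessageRequest {
  model: string;
  max_tokens: number;
  messages: MessageParam[];
}

// Hypothetical helper: build the body passed to anthropic.messages.create(...).
function buildPoemRequest(topic: string): MessageRequest {
  return {
    model: "claude-3-5-sonnet-20240620",
    max_tokens: 1024, // assumed limit; the original value is not shown
    messages: [{ role: "user", content: `Write a short poem about ${topic}.` }],
  };
}

// Hypothetical helper: join the text blocks of a response's `content` array.
function extractText(content: ContentBlock[]): string {
  return content
    .filter((block): block is TextBlock => block.type === "text")
    .map((block) => block.text)
    .join("");
}

// With the SDK, usage would look roughly like:
//   const msg = await anthropic.messages.create(buildPoemRequest("the sea"));
//   console.log(extractText(msg.content as ContentBlock[]));
```

Filtering by block type avoids assuming that the first block is always text.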

insightfulSalmonRabbit

@ubixsnow

@jsxImportSource https://esm.sh/react

HTTP

<p className="text-xs sm:text-sm text-gray-600 dark:text-gray-400">https://arxiv.org/abs/2411.07279</p>

<p className="text-xs sm:text-sm text-gray-600 dark:text-gray-400">

https://www.anthropic.com/news/github-copilot

</p>

<p className="text-xs sm:text-sm text-gray-600 dark:text-gray-400">

runAgent

@jacoblee93

An interactive, runnable TypeScript val by jacoblee93

Script

const { ChatOpenAI } = await import("npm:langchain/chat_models/openai");

const { ChatAnthropic } = await import("npm:langchain/chat_models/anthropic");

const { DynamicTool, Tool, SerpAPI } = await import("npm:langchain/tools");

maxTokens: 2048,

const anthropicModel = new ChatAnthropic({

modelName: "claude-v1",

anthropicApiKey: process.env.ANTHROPIC_API_KEY,

temperature: 0,
});

const prExtractionChain = new LLMChain({

llm: anthropicModel,

prompt: ChatPromptTemplate.fromPromptMessages([

VALLE

@davitchanturia

VALL-E: LLM code generation for vals! Make apps with a frontend, backend, and database. It's a bit of work to get this running, but it's worth it. Fork this val to your own profile. Make a folder for the temporary vals that get generated, take the ID from the URL, and put it in `tempValsParentFolderId`. If you want to use OpenAI models, you need to set the `OPENAI_API_KEY` env var. If you want to use Anthropic models, you need to set the `ANTHROPIC_API_KEY` env var. Create a Val Town API token, open the browser preview of this val, and use the API token as the password to log in.

HTTP

* If you want to use OpenAI models you need to set the `OPENAI_API_KEY` [env var](https://www.val.town/settings/environment-variables).

* If you want to use Anthropic models you need to set the `ANTHROPIC_API_KEY` [env var](https://www.val.town/settings/environment-variables).

* Create a [Val Town API token](https://www.val.town/settings/api), open the browser preview of this val, and use the API token as the password to log in.
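These setup steps tie the required API key to the model family. Below is a minimal sketch of a startup check. It assumes, as the `VALLErun` snippet further down does, that OpenAI model names start with `gpt` and that every other model is Anthropic's. `requiredKeyFor` and `checkSetup` are hypothetical helpers. The environment is passed in as a plain object so the check runs anywhere; in a val you would read it with `Deno.env.get`.

```typescript
type Env = Record<string, string | undefined>;

// Assumption: OpenAI model names start with "gpt"; all other models are Anthropic's.
function requiredKeyFor(model: string): "OPENAI_API_KEY" | "ANTHROPIC_API_KEY" {
  return model.startsWith("gpt") ? "OPENAI_API_KEY" : "ANTHROPIC_API_KEY";
}

// Hypothetical startup check: returns a list of problems (empty when setup looks complete).
function checkSetup(model: string, env: Env, tempValsParentFolderId?: string): string[] {
  const problems: string[] = [];
  const key = requiredKeyFor(model);
  if (!env[key]) problems.push(`Set the ${key} env var to use ${model}.`);
  if (!tempValsParentFolderId) {
    problems.push("Set tempValsParentFolderId to the ID of your temporary vals folder.");
  }
  return problems;
}

console.log(checkSetup("claude-3-5-sonnet-20240620", { OPENAI_API_KEY: "sk-..." }, "folder-id"));
```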

VALLE

@ubixsnow


HTTP


claudeForwarder

@prabhanshu

// This val implements a function to call the Claude API using fetch.

HTTP

async function callClaudeApi(systemPrompt: string, userPrompt: string, apiKey: string): Promise<string> {

const url = "https://api.anthropic.com/v1/messages";

const headers = {
  "x-api-key": apiKey,
  "anthropic-version": "2023-06-01",
  "anthropic-beta": "prompt-caching-2024-07-31",
};

const data = {

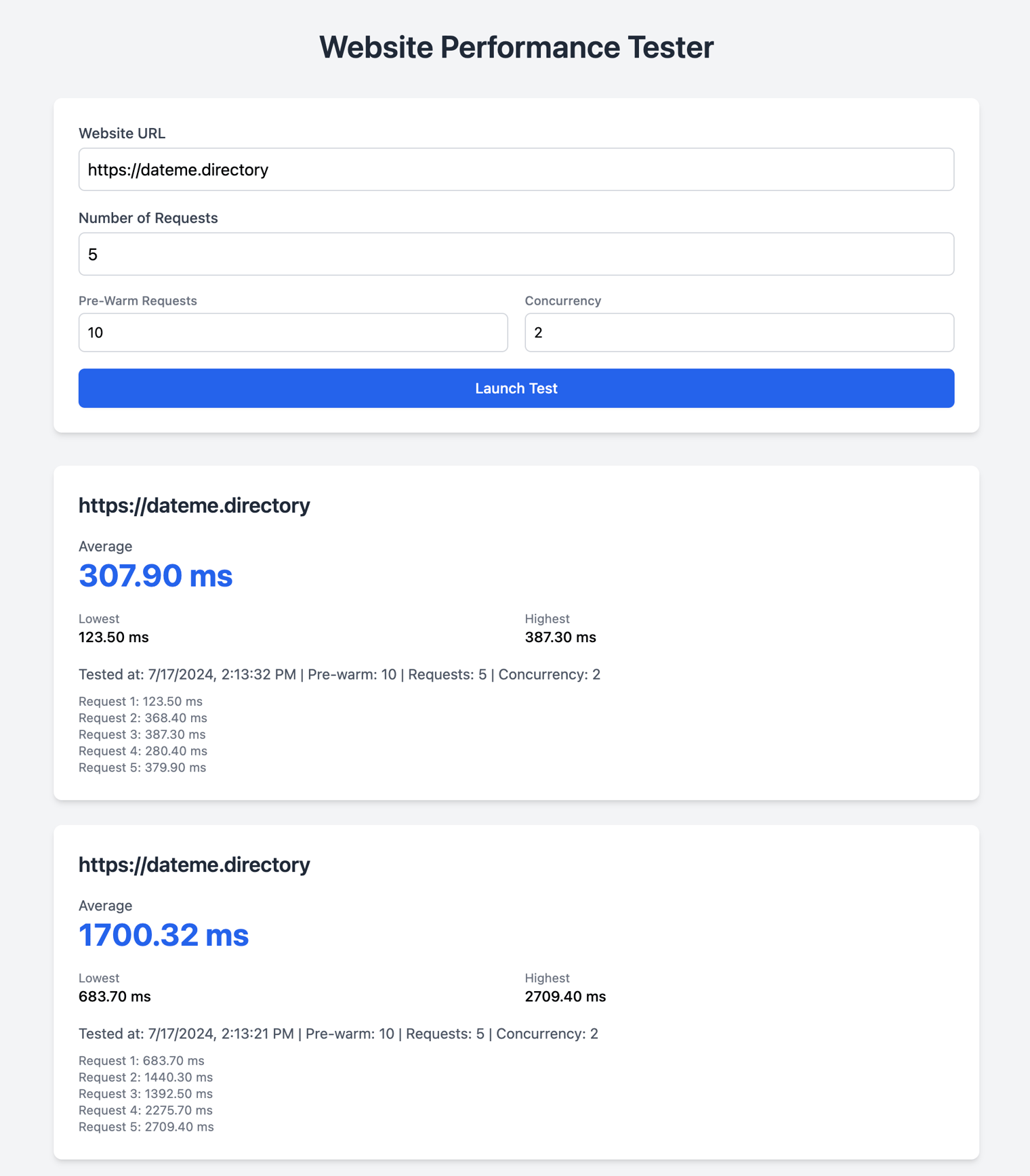
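The snippet stops at `const data = {`. Below is a sketch of how such a request could be assembled. It assumes the Messages API body shape and the prompt-caching beta's `cache_control: { type: "ephemeral" }` marker on the system prompt, which the `prompt-caching-2024-07-31` header enables. `buildClaudeRequest`, the model name, and `max_tokens` are illustrative choices, not the val's code. Building the `RequestInit` separately keeps it testable without a network call.

```typescript
// Hypothetical helper: assemble the fetch() arguments for the Messages API.
function buildClaudeRequest(systemPrompt: string, userPrompt: string, apiKey: string): RequestInit {
  const headers = {
    "x-api-key": apiKey,
    "anthropic-version": "2023-06-01",
    "anthropic-beta": "prompt-caching-2024-07-31",
    "content-type": "application/json",
  };
  const data = {
    model: "claude-3-5-sonnet-20240620", // assumed model
    max_tokens: 1024, // assumed limit
    // Marking the system prompt as cacheable lets repeated calls reuse it.
    system: [{ type: "text", text: systemPrompt, cache_control: { type: "ephemeral" } }],
    messages: [{ role: "user", content: userPrompt }],
  };
  return { method: "POST", headers, body: JSON.stringify(data) };
}

// Sketch of the full call: send the request and return the first text block.
async function callClaudeApi(systemPrompt: string, userPrompt: string, apiKey: string): Promise<string> {
  const res = await fetch(
    "https://api.anthropic.com/v1/messages",
    buildClaudeRequest(systemPrompt, userPrompt, apiKey),
  );
  if (!res.ok) throw new Error(`Claude API error ${res.status}: ${await res.text()}`);
  const json = await res.json();
  return json.content.find((b: { type: string }) => b.type === "text")?.text ?? "";
}
```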

perf

@stevekrouse

Perf: a website performance tester. I had Anthropic build this for me to show off the launch of HTTP (Preview) vals: https://blog.val.town/blog/http-preview/

HTTP

# Perf - a website performance tester

I had Anthropic build this for me to show off the launch of `HTTP (Preview)` vals: https://blog.val.town/blog/http-preview/

VALLE

@tmcw


HTTP


VALLE

@jxnblk


HTTP


VALLErun

@tmcw

The actual code for VALL-E: https://www.val.town/v/janpaul123/VALLE

HTTP

import { sleep } from "https://esm.town/v/stevekrouse/sleep?v=1";

import { anthropic } from "npm:@ai-sdk/anthropic";

import { openai } from "npm:@ai-sdk/openai";

} else {

vercelModel = anthropic(model);

// Anthropic doesn't support system messages not at the very start.

const systemRole = model.startsWith("gpt") ? "system" : "user";

maxTokens: 8192,

headers: { "anthropic-beta": "max-tokens-3-5-sonnet-2024-07-15" },

let messages = [
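The comment in this snippet explains why VALL-E sends mid-conversation system instructions with the `user` role for non-OpenAI models: Anthropic does not support system messages except at the very start. Below is a minimal sketch of that rule, using the same `startsWith("gpt")` test as the val. `ChatMessage`, `systemRoleFor`, and `buildMessages` are hypothetical names, not taken from the val.

```typescript
type Role = "system" | "user" | "assistant";
type ChatMessage = { role: Role; content: string };

// Mirrors the val's rule: only OpenAI ("gpt*") models get mid-conversation system messages.
function systemRoleFor(model: string): Role {
  return model.startsWith("gpt") ? "system" : "user";
}

// Hypothetical helper: the leading system prompt keeps the "system" role, while a
// later instruction uses whichever role the model accepts at that position.
function buildMessages(
  model: string,
  systemPrompt: string,
  history: ChatMessage[],
  instruction: string,
): ChatMessage[] {
  return [
    { role: "system", content: systemPrompt },
    ...history,
    { role: systemRoleFor(model), content: instruction },
  ];
}
```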

VALLE

@tgrv


HTTP


watchAnthropicCircuitsBlog

@jsomers

An interactive, runnable TypeScript val by jsomers

Cron

import { blob } from "https://esm.town/v/std/blob";

export const watchWebsite = async () => {
  const url = "https://transformer-circuits.pub";
  const newHtml = await fetch(url).then(r => r.text());
  const key = "watch:" + url;
  let oldHtml = "";
  try {
    oldHtml = await blob.get(key).then(r => r.text());
  } catch (error) {
    console.log("error");
  }
  await blob.set(key, newHtml);
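This cron val compares the page's current HTML with the copy saved in blob storage on the previous run. Below is a minimal sketch of that compare-and-store step. It assumes a simple key/value interface in place of Val Town's `blob` (an in-memory `Map` here, so it runs anywhere). `KeyValueStore`, `MemoryStore`, and `checkForChange` are hypothetical names.

```typescript
// Minimal stand-in for a key/value store such as Val Town's blob storage.
interface KeyValueStore {
  get(key: string): Promise<string | undefined>;
  set(key: string, value: string): Promise<void>;
}

// Hypothetical in-memory implementation used for the sketch.
class MemoryStore implements KeyValueStore {
  private data = new Map<string, string>();
  async get(key: string): Promise<string | undefined> {
    return this.data.get(key);
  }
  async set(key: string, value: string): Promise<void> {
    this.data.set(key, value);
  }
}

// Returns true when the page differs from the last saved copy, then saves the new copy.
// The first run (nothing saved yet) counts as "no change" to avoid a false alert.
async function checkForChange(store: KeyValueStore, url: string, newHtml: string): Promise<boolean> {
  const key = "watch:" + url;
  const oldHtml = await store.get(key);
  await store.set(key, newHtml);
  return oldHtml !== undefined && oldHtml !== newHtml;
}
```

In the val itself, `store.get` and `store.set` would be backed by `blob.get(key).then(r => r.text())` and `blob.set(key, newHtml)`, as in the snippet above. A `true` result is where a notification would be sent.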