Search

emojiInstantSearch

@maxm

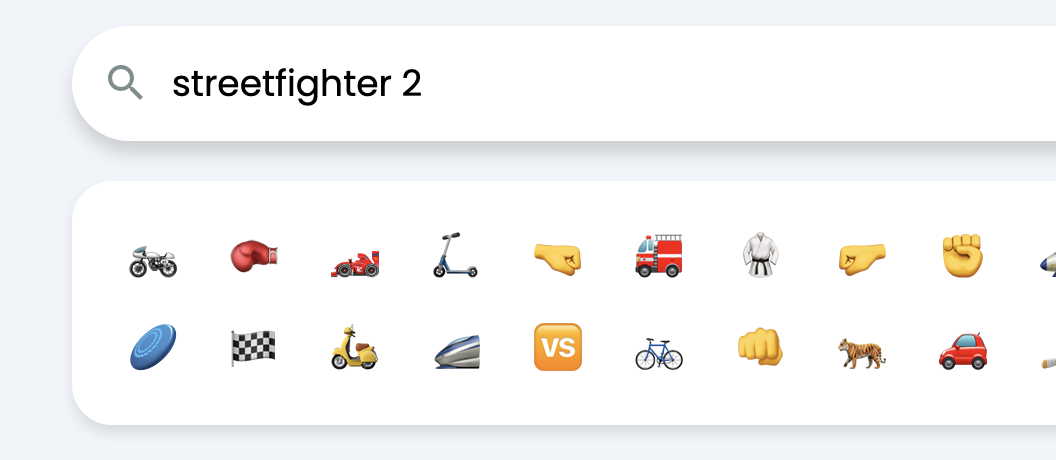

Emoji Instant Search Uses vector embeddings to get "vibes" search on emojis

HTTP

# Emoji Instant Search

Uses vector embeddings to get "vibes" search on emojis

import _ from "npm:lodash";

// https://gist.github.com/maxmcd/b170e57d53cb942642c35b135e2d52f1 (takes a bit of time to load)

import { searchEmojis } from "https://esm.town/v/maxm/emojiVectorEmbeddings";

import { extractValInfo } from "https://esm.town/v/pomdtr/extractValInfo?v=29";

const app = new Hono();

semanticSearchBlobs

@janpaul123

Part of Val Town Semantic Search . Uses Val Town's blob storage to search embeddings of all vals, by downloading them all and iterating through all of them to compute distance. Slow and terrible, but it works! Get metadata from blob storage: allValsBlob${dimensions}EmbeddingsMeta (currently allValsBlob1536EmbeddingsMeta ), which has a list of all indexed vals and where their embedding is stored ( batchDataIndex points to the blob, and valIndex represents the offset within the blob). The blobs have been generated by janpaul123/indexValsBlobs . It is not run automatically. Get all blobs with embeddings pointed to by the metadata, e.g. allValsBlob1536EmbeddingsData_0 for batchDataIndex 0. Call OpenAI to generate an embedding for the search query. Go through all embeddings and compute cosine similarity with the embedding for the search query. Return list sorted by similarity.

Script

Uses Val Town's [blob storage](https://docs.val.town/std/blob/) to search embeddings of all vals, by downloading them all and iterating through all of them to compute distance. Slow and terrible, but it works!

- Get metadata from blob storage: `allValsBlob${dimensions}EmbeddingsMeta` (currently `allValsBlob1536EmbeddingsMeta`), which has a list of all indexed vals and where their embedding is stored (`batchDataIndex` points to the blob, and `valIndex` represents the offset within the blob).

- Get all blobs with embeddings pointed to by the metadata, e.g. `allValsBlob1536EmbeddingsData_0` for `batchDataIndex` 0.

const allValsBlobEmbeddingsMeta = (await blob.getJSON(`allValsBlob${dimensions}EmbeddingsMeta`)) ?? {};

const allBatchDataIndexes = _.uniq(Object.values(allValsBlobEmbeddingsMeta).map((item: any) => item.batchDataIndex));

const embeddingsBatches = [];

const embeddingsBatchBlobName = `allValsBlob${dimensions}EmbeddingsData_${batchDataIndex}`;

const promise = blob.get(embeddingsBatchBlobName).then((response) => response.arrayBuffer());

embeddingsBatches[batchDataIndex as any] = data;

console.log(`Loaded ${embeddingsBatchBlobName} (${data.byteLength} bytes)`);

const queryEmbedding = (await openai.embeddings.create({

for (const id in allValsBlobEmbeddingsMeta) {

const meta = allValsBlobEmbeddingsMeta[id];

conversationalRetrievalQAChainSummaryMemory

@jacoblee93

An interactive, runnable TypeScript val by jacoblee93

Script

"https://esm.sh/langchain/chat_models/openai"

const { OpenAIEmbeddings } = await import(

"https://esm.sh/langchain/embeddings/openai"

const { ConversationSummaryMemory } = await import(

[{ id: 2 }, { id: 1 }, { id: 3 }],

new OpenAIEmbeddings({

openAIApiKey: process.env.OPENAI_API_KEY,

semanticSearchBlogPostPlot

@janpaul123

An interactive, runnable TypeScript val by janpaul123

HTTP

import blogPostEmbeddingsDimensionalityReduction from "https://esm.town/v/janpaul123/blogPostEmbeddingsDimensionalityReduction";

export async function semanticSearchBlogPostPlot() {

const Plot = await import("https://cdn.jsdelivr.net/npm/@observablehq/plot@0.6.14/+esm");

{ parseHTML: p },

) => p(`<a>`));

const points = await blogPostEmbeddingsDimensionalityReduction();

const chart = Plot.plot({

document,

buildclubProjectSearch

@yawnxyz

Use embeddings / Lunr search on Airtable. Embeddings need to have been generated / stored on Airtable, or this gets very slow / costly. Simple usage: https://yawnxyz-buildclubprojectsearch.web.val.run/search?query=cars Full GET request: https://yawnxyz-buildclubprojectsearch.web.val.run/search?query=your+search+query&similarity_threshold=0.8&max_results=5&base_id=your_base_id&table_name=your_table_name&content_column=your_content_column&embedding_column=your_embedding_column

HTTP

Use embeddings / Lunr search on Airtable. Embeddings need to have been generated / stored on Airtable, or this gets very slow / costly.

- Simple usage: https://yawnxyz-buildclubprojectsearch.web.val.run/search?query=cars

<th>Content</th>

<th>embeddingsContent</th>

</tr>

<td>{result.content}</td>

<td>{result.embeddingsContent}</td>

</tr>

const defaultContentColumn = "Content";

const defaultEmbeddingColumn = "Embeddings";

async function fetchAirtableData(baseId, tableName, nameColumn, contentColumn, embeddingColumn) {

embedding: record.get(embeddingColumn).split(",").map(parseFloat),

embeddingsContent: record.get('EmbeddingsContent'),

return documents;

console.log('documents:', documents)

await semanticSearch.addDocuments({documents, fields: 'embeddingsContent'});

const results = await semanticSearch.search({query, similarityThreshold});

semanticSearchTurso

@janpaul123

Part of Val Town Semantic Search . Uses Turso to search embeddings of all vals, using the sqlite-vss extension. Call OpenAI to generate an embedding for the search query. Query the vss_vals_embeddings table in Turso using vss_search . The vss_vals_embeddings table has been generated by janpaul123/indexValsTurso . It is not run automatically. This table is incomplete due to a bug in Turso .

Script

*Part of [Val Town Semantic Search](https://www.val.town/v/janpaul123/valtownsemanticsearch).*

Uses [Turso](https://turso.tech/) to search embeddings of all vals, using the [sqlite-vss](https://github.com/asg017/sqlite-vss) extension.

- Call OpenAI to generate an embedding for the search query.

- Query the `vss_vals_embeddings` table in Turso using `vss_search`.

- The `vss_vals_embeddings` table has been generated by [janpaul123/indexValsTurso](https://www.val.town/v/janpaul123/indexValsTurso). It is not run automatically.

- This table is incomplete due to a [bug in Turso](https://discord.com/channels/933071162680958986/1245378515679973420/1245378515679973420).

const sqlite = createClient({

url: "libsql://valsembeddings-jpvaltown.turso.io",

authToken: Deno.env.get("TURSO_AUTH_TOKEN_VALSEMBEDDINGS"),

const openai = new OpenAI();

const embedding = await openai.embeddings.create({

model: "text-embedding-3-small",

sql:

"WITH matches AS (SELECT rowid, distance FROM vss_vals_embeddings WHERE vss_search(embedding, :embeddingBinary) LIMIT 50) SELECT id, distance FROM matches JOIN vals_embeddings ON matches.rowid = vals_embeddings.rowid",

args: { embeddingBinary },

getContentFromUrl

@yawnxyz

getContentFromUrl Use this for summarizers.

Combines https://r.jina.ai/URL and markdown.download's Youtube transcription getter to do its best to retrieve content from URLs. https://arstechnica.com/space/2024/06/nasa-indefinitely-delays-return-of-starliner-to-review-propulsion-data

https://journals.asm.org/doi/10.1128/iai.00065-23 Usage: https://yawnxyz-getcontentfromurl.web.val.run/https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10187409/ https://yawnxyz-getcontentfromurl.web.val.run/https://www.youtube.com/watch?v=gzsczZnS84Y&ab_channel=PhageDirectory

HTTP

const { url, opts = 'content', crawlMode = 'jina', useCache, getTags, getTagsPrompt, getSummary, getSummaryPrompt, getEmbeddings } = body;

const [summary, tags, embeddings] = await Promise.all([

getEmbeddings ? getEmbeddingsFn(content) : null

return c.json({ summary: summary?.text, tags: tags?.text, content, embeddings });

getSummary = true, getSummaryPrompt, getTags = true, getTagsPrompt, getEmbeddings,

let summary, tags, embeddings

if (getEmbeddings) {

embeddings = await getEmbeddingsFn(content + " " + summary + " " + tags);

embeddings

export const getEmbeddingsFn = async (content) => {

ai

@yawnxyz

An http and class wrapper for Vercel's AI SDK Usage: Groq: https://yawnxyz-ai.web.val.run/generate?prompt="tell me a beer joke"&provider=groq&model=llama3-8b-8192 Perplexity: https://yawnxyz-ai.web.val.run/generate?prompt="what's the latest phage directory capsid & tail article about?"&provider=perplexity Mistral: https://yawnxyz-ai.web.val.run/generate?prompt="tell me a joke?"&provider=mistral&model="mistral-small-latest" async function calculateEmbeddings(text) {

const url = `https://yawnxyz-ai.web.val.run/generate?embed=true&value=${encodeURIComponent(text)}`;

try {

const response = await fetch(url);

const data = await response.json();

return data;

} catch (error) {

console.error('Error calculating embeddings:', error);

return null;

}

}

HTTP

- Mistral: `https://yawnxyz-ai.web.val.run/generate?prompt="tell me a joke?"&provider=mistral&model="mistral-small-latest"`

async function calculateEmbeddings(text) {

const url = `https://yawnxyz-ai.web.val.run/generate?embed=true&value=${encodeURIComponent(text)}`;

} catch (error) {

console.error('Error calculating embeddings:', error);

return null;

conversationalRetrievalQAChainStreamingExample

@jacoblee93

An interactive, runnable TypeScript val by jacoblee93

Script

"https://esm.sh/langchain/chat_models/openai"

const { OpenAIEmbeddings } = await import(

"https://esm.sh/langchain/embeddings/openai"

const { BufferMemory } = await import("https://esm.sh/langchain/memory");

[{ id: 2 }, { id: 1 }, { id: 3 }],

new OpenAIEmbeddings({

openAIApiKey: process.env.OPENAI_API_KEY,

retrieverSampleSelfQuery

@webup

An interactive, runnable TypeScript val by webup

Script

description: "The length of the movie in minutes",

type: "number",

* Next, we instantiate a vector store. This is where we store the embeddings of the documents.

* We also need to provide an embeddings object. This is used to embed the documents.

const modelBuilder = await getModelBuilder();

const llm = await modelBuilder();

blobby

@yawnxyz

Blobby Blobby is a simple wrapper around blob w/ more helpers for scoping, uploading/downloading, writing and reading strings, and so on. Todo Support lunr / semantic search, and embeddings Collections that support pointing to multiple blobs, like {description, embeddings, fileblob, ...} with a shared index / lookup

Script

Todo

- Support lunr / semantic search, and embeddings

- Collections that support pointing to multiple blobs, like {description, embeddings, fileblob, ...} with a shared index / lookup

askLexi

@thomasatflexos

An interactive, runnable TypeScript val by thomasatflexos

Script

const { SupabaseVectorStore } = await import("npm:langchain/vectorstores");

const { ChatOpenAI } = await import("npm:langchain/chat_models");

const { OpenAIEmbeddings } = await import("npm:langchain/embeddings");

const { createClient } = await import(

"https://esm.sh/@supabase/supabase-js@2"

handleLLMNewToken(token) {

const vectorStore = await SupabaseVectorStore.fromExistingIndex(

new OpenAIEmbeddings({

openAIApiKey: process.env.OPEN_API_KEY,

client,

bronzeOrangutan

@janpaul123

Uses Val Town's blob storage to search embeddings of all vals, by downloading them all and iterating through all of them to compute distance. Slow and terrible, but it works!

Script

Uses Val Town's [blob storage](https://docs.val.town/std/blob/) to search embeddings of all vals, by downloading them all and iterating through all of them to compute distance. Slow and terrible, but it works!

valtownsemanticsearch

@janpaul123

😎 VAL VIBES: Val Town Semantic Search This val hosts an HTTP server that lets you search all vals based on vibes. If you search for "discord bot" it shows all vals that have "discord bot" vibes. It does this by comparing embeddings from OpenAI generated for the code of all public vals, to an embedding of your search query. This is an experiment to see if and how we want to incorporate semantic search in the actual Val Town search page. I implemented three backends, which you can switch between in the search UI. Check out these vals for details on their implementation. Neon: storing and searching embeddings using the pg_vector extension in Neon's Postgres database. Searching: janpaul123/semanticSearchNeon Indexing: janpaul123/indexValsNeon Blobs: storing embeddings in Val Town's standard blob storage , and iterating through all of them to compute distance. Slow and terrible, but it works! Searching: janpaul123/semanticSearchBlobs Indexing: janpaul123/indexValsBlobs Turso: storing and searching using the sqlite-vss extension. Abandoned because of a bug in Turso's implementation. Searching: janpaul123/semanticSearchTurso Indexing: janpaul123/indexValsTurso All implementations use the database of public vals , made by Achille Lacoin , which is refreshed every hour. The Neon implementation updates every 10 minutes, and the other ones are not updated. I also forked Achille's search UI for this val. Please share any feedback and suggestions, and feel free to fork our vals to improve them. This is a playground for semantic search before we implement it in the product for real!

HTTP

This val hosts an [HTTP server](https://janpaul123-valtownsemanticsearch.web.val.run/) that lets you search all vals based on vibes. If you search for "discord bot" it shows all vals that have "discord bot" vibes.

It does this by comparing [embeddings from OpenAI](https://platform.openai.com/docs/guides/embeddings) generated for the code of all public vals, to an embedding of your search query.

This is an experiment to see if and how we want to incorporate semantic search in the actual Val Town search page.

I implemented three backends, which you can switch between in the search UI. Check out these vals for details on their implementation.

- **Neon:** storing and searching embeddings using the [pg_vector](https://neon.tech/docs/extensions/pgvector) extension in Neon's Postgres database.

- Searching: [janpaul123/semanticSearchNeon](https://www.val.town/v/janpaul123/semanticSearchNeon)

- Indexing: [janpaul123/indexValsNeon](https://www.val.town/v/janpaul123/indexValsNeon)

- **Blobs:** storing embeddings in Val Town's [standard blob storage](https://docs.val.town/std/blob/), and iterating through all of them to compute distance. Slow and terrible, but it works!

- Searching: [janpaul123/semanticSearchBlobs](https://www.val.town/v/janpaul123/semanticSearchBlobs)

conversationalQAChainEx

@jacoblee93

An interactive, runnable TypeScript val by jacoblee93

Script

"https://esm.sh/langchain/vectorstores/hnswlib"

const { OpenAIEmbeddings } = await import(

"https://esm.sh/langchain/embeddings/openai"

const { ConversationalRetrievalQAChain } = await import(

[{ id: 2 }, { id: 1 }, { id: 3 }, { id: 4 }, { id: 5 }],

new OpenAIEmbeddings({

openAIApiKey: process.env.OPENAI_API_KEY,