Search

Book_Recommendation_System

@Rogueox

@jsxImportSource https://esm.sh/react@18.2.0

HTTP

// Dynamic AI-powered book recommendations

const { OpenAI } = await import("https://esm.town/v/std/openai");

const openai = new OpenAI();

const completion = await openai.chat.completions.create({

messages: [

// Log the raw response for debugging

console.log('Raw OpenAI Response:', completion.choices[0].message.content);

// Attempt to parse the response, with fallback
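The matched lines stop before the parsing step, so the val's actual fallback logic is not visible here. As a minimal sketch of what "parse with fallback" could look like, assuming the model was asked for a JSON array of books: the `BookRecommendation` shape, `FALLBACK_RECOMMENDATIONS`, and `parseRecommendations` are hypothetical names introduced for illustration.

```typescript
// Hypothetical shape; the val's real response format is not shown in the snippet.
interface BookRecommendation {
  title: string;
  author: string;
}

// Returned whenever the model output cannot be parsed (placeholder, not from the val).
const FALLBACK_RECOMMENDATIONS: BookRecommendation[] = [];

function parseRecommendations(raw: string | null): BookRecommendation[] {
  if (!raw) return FALLBACK_RECOMMENDATIONS;
  // Models often wrap JSON in prose or code fences, so isolate the outermost array.
  const start = raw.indexOf("[");
  const end = raw.lastIndexOf("]");
  if (start === -1 || end <= start) return FALLBACK_RECOMMENDATIONS;
  try {
    const parsed: unknown = JSON.parse(raw.slice(start, end + 1));
    if (!Array.isArray(parsed)) return FALLBACK_RECOMMENDATIONS;
    // Keep only entries that carry both expected string fields.
    return parsed.filter(
      (item): item is BookRecommendation =>
        typeof item === "object" && item !== null &&
        typeof (item as BookRecommendation).title === "string" &&
        typeof (item as BookRecommendation).author === "string",
    );
  } catch {
    return FALLBACK_RECOMMENDATIONS;
  }
}
```

Returning an empty list instead of throwing keeps the HTTP handler responsive when the model replies with prose rather than JSON.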

umap

@ejfox

UMAP Dimensionality Reduction API. This is a high-performance dimensionality reduction microservice using UMAP (Uniform Manifold Approximation and Projection). It provides an efficient way to reduce high-dimensional data to 2D or 3D representations, making it easier to visualize and analyze complex datasets. When to use this service: visualizing high-dimensional data in 2D or 3D space; reducing the dimensionality of large datasets for machine learning tasks; exploring relationships and clusters in complex data; preprocessing for other machine learning algorithms. Common use cases: visualizing word embeddings in a scatterplot; exploring customer segmentation in marketing analytics; visualizing image embeddings in computer vision tasks.
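The description does not show the service's request format, so the following is only a sketch of client-side validation before calling such a service. The `UmapRequest` field names and `buildUmapRequest` are hypothetical and are not the service's documented API. The only constraints taken from the description are that the input is a set of high-dimensional vectors and the output is 2D or 3D.

```typescript
// Hypothetical request shape: field names are illustrative, not the service's documented API.
interface UmapRequest {
  embeddings: number[][];
  nComponents: number;
}

function buildUmapRequest(embeddings: number[][], nComponents: number): UmapRequest {
  // The description only mentions 2D and 3D outputs.
  if (nComponents !== 2 && nComponents !== 3) {
    throw new Error("nComponents must be 2 or 3");
  }
  if (embeddings.length === 0) {
    throw new Error("at least one embedding is required");
  }
  const dim = embeddings[0].length;
  // Every vector must share one dimensionality for the reduction to be well defined.
  if (embeddings.some((vector) => vector.length !== dim)) {
    throw new Error("all embeddings must have the same length");
  }
  return { embeddings, nComponents };
}
```

Checking the matrix shape locally gives a clearer error than a failed remote call would.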

HTTP

<div class="example">

<h3>Example with OpenAI Embeddings:</h3>

<p>This example shows how to use the UMAP service with OpenAI embeddings:</p>

<pre>

// First, generate embeddings using OpenAI API

import { OpenAI } from "https://esm.town/v/std/openai";

const openai = new OpenAI();

async function getEmbeddings(texts) {

const response = await openai.embeddings.create({

model: "text-embedding-ada-002",

// Then, use these embeddings with the UMAP service

const texts = ["Hello world", "OpenAI is amazing", "UMAP reduces dimensions"];

const embeddings = await getEmbeddings(texts);

chatGPTPlugin

@stevekrouse

ChatGPT Plugin for Val Town. Run code on Val Town from ChatGPT. Usage: I haven't been able to get it to do very useful things yet. It certainly can evaluate simple JS code. It would be awesome if it knew how to use other APIs and make fetch calls to them, but it has been failing at that. Limitations: This plugin currently only has unauthenticated access to POST /v1/eval, which basically means that all it can do is evaluate JavaScript or TypeScript. In theory it could refer to any existing vals in Val Town, but it wouldn't know about those unless you told it. Future directions: Once we have more robust APIs to search for existing vals, this plugin could be WAY more valuable! In theory GPT-4 could first search for vals that do a certain task and, if it finds one, write code based on that val. In practice, however, that might require too many steps for poor GPT. We might need to use some sort of agent or LangChain thing if we wanted that sort of behavior. Adding authentication could also enable it to make requests using your secrets and private vals and create new vals for you. However, I am dubious that this would actually be practically useful. Installation: (1) Select GPT-4 (requires ChatGPT Plus). (2) Click "No plugins enabled". (3) Click "Install an unverified plugin" or "Develop your own plugin" (I'm not sure of the difference). (4) Paste in this val's express endpoint (https://stevekrouse-chatGPTPlugin.express.val.run). (5) Click through the prompts until it's installed.
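To make the "unauthenticated POST /v1/eval" limitation concrete, here is a rough sketch of the request the plugin would be issuing. The host `api.val.town` and the JSON body with a `code` field are assumptions based on the endpoint path named above, and `buildEvalRequest` is a hypothetical helper. The request is built as a plain object so the sketch runs without network access.

```typescript
// Plain description of an HTTP call, so the sketch runs without touching the network.
interface EvalRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Assumption: the eval endpoint lives at api.val.town and accepts a JSON body with a `code` field.
function buildEvalRequest(code: string): EvalRequest {
  return {
    url: "https://api.val.town/v1/eval",
    method: "POST",
    // No Authorization header: the plugin only has unauthenticated access.
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ code }),
  };
}

const evalRequest = buildEvalRequest("1 + 1");
// To actually send it: await fetch(evalRequest.url, evalRequest)
```

Because no token is attached, code evaluated this way cannot read secrets or private vals, which matches the limitation described above.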

Express (deprecated)

It would be awesome if it knew how to use other APIs and make `fetch` calls to them, but it has been [failing at that](https://chat.openai.com/share/428183eb-8e6d-4008-b295-f3b0ef2bedd2).

## Limitations

import { fetchJSON } from "https://esm.town/v/stevekrouse/fetchJSON";

import { openaiOpenAPI } from "https://esm.town/v/stevekrouse/openaiOpenAPI";

// https://stevekrouse-chatgptplugin.express.val.run/.well-known/ai-plugin.json

// only POST /v1/eval for now

res.send(openaiOpenAPI);

else if (req.path === "/v1/eval") {

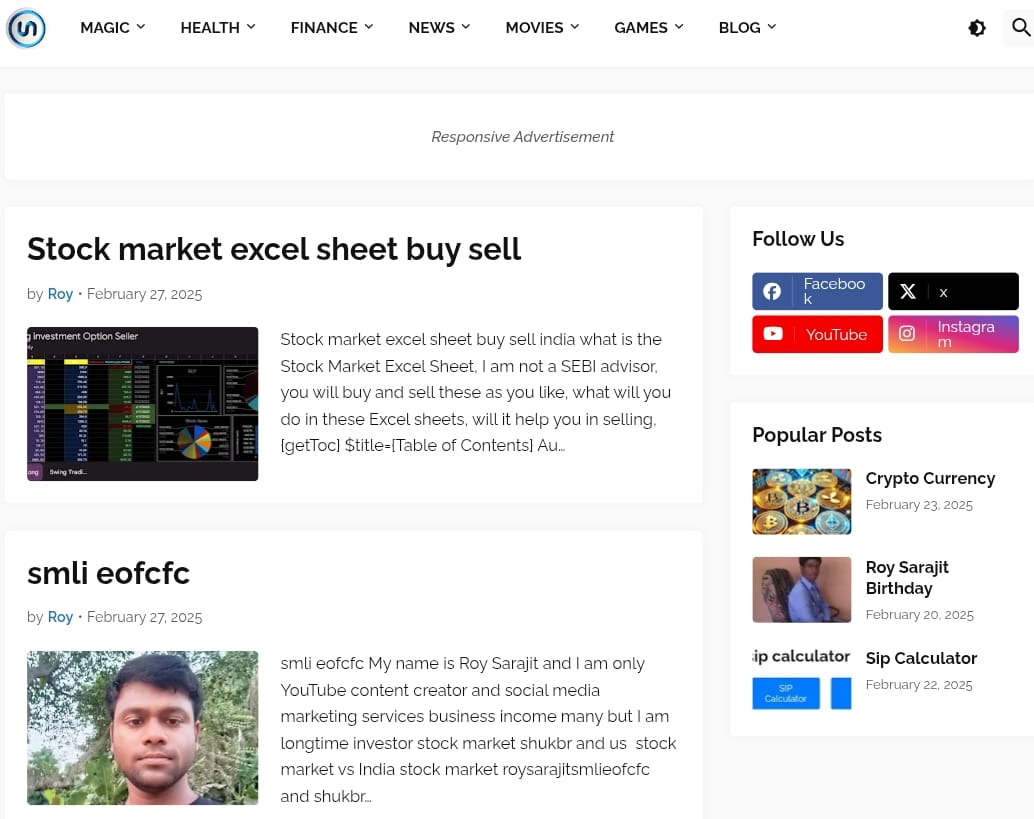

photoEditingAIApp

@roysarajit143

@jsxImportSource https://esm.sh/react@18.2.0

HTTP

const { blob } = await import("https://esm.town/v/std/blob");

const { OpenAI } = await import("https://esm.town/v/std/openai");

const openai = new OpenAI();

if (request.method === 'POST') {

const { prompt } = await request.json();

const response = await openai.images.generate({

model: "dall-e-3",

weatherGPT

@abar04

Cron

import { email } from "https://esm.town/v/std/email?v=11";

import { OpenAI } from "npm:openai";

let location = "Perth WA";

).then(r => r.json());

const openai = new OpenAI();

let chatCompletion = await openai.chat.completions.create({

messages: [{

tenaciousPeachHornet

@Argu

@jsxImportSource https://esm.sh/react@18.2.0

HTTP

try {

const { OpenAI } = await import("https://esm.town/v/std/openai");

const openai = new OpenAI();

const completion = await openai.chat.completions.create({

messages: messages,

VALLE

@peterzakin

Fork it and authenticate with your Val Town API token as the password. Needs an OPENAI_API_KEY env var to be set, and change the variables under "Set these to your own". https://x.com/JanPaul123/status/1812957150559211918

HTTP


blah

@davincidreams

An interactive, runnable TypeScript val by davincidreams

HTTP

"description": "A sample blah manifest demonstrating various tool types and configurations.",

"env": {

"OPENAI_API_KEY": Deno.env.get("OPENAI_API_KEY"),

"tools": [

"name": "hello_name",

helpfulFuchsiaAmphibian

@Handlethis

@jsxImportSource https://esm.sh/react

HTTP

// Generate AI audio description

const { OpenAI } = await import("https://esm.town/v/std/openai");

const openai = new OpenAI();

const audioDescCompletion = await openai.chat.completions.create({

messages: [{

aigeneratorblog

@websrai

@jsxImportSource https://esm.sh/react@18.2.0

HTTP

const AI_PROVIDERS = [

"OpenAI",

"Anthropic",

const [adContent, setAdContent] = useState("");

const [selectedProvider, setSelectedProvider] = useState("OpenAI");

const [apiKey, setApiKey] = useState("");

setUser(userData);

setSelectedProvider(userData.aiProvider || "OpenAI");

setApiKey(userData.apiKey || "");

const { sqlite } = await import("https://esm.town/v/stevekrouse/sqlite");

const { OpenAI } = await import("https://esm.town/v/std/openai");

const openai = new OpenAI();

const SCHEMA_VERSION = 2;

VALUES (?, ?, ?, ?, ?)

`, ["Demo User", "FREE", 0, "OpenAI", ""]);

user = (await sqlite.execute(`SELECT * FROM ${KEY}_users_${SCHEMA_VERSION} LIMIT 1`)).rows[0];

headers: { "Content-Type": "application/json" }

// Generate content using OpenAI

const completion = await openai.chat.completions.create({

messages: [

seoKeywordResearchTool

@websrai

@jsxImportSource https://esm.sh/react@18.2.0

HTTP

const AI_PROVIDERS = [

"OpenAI",

"Anthropic",

const [adContent, setAdContent] = useState("");

const [selectedProvider, setSelectedProvider] = useState("OpenAI");

const [apiKey, setApiKey] = useState("");

setUser(userData);

setSelectedProvider(userData.aiProvider || "OpenAI");

setApiKey(userData.apiKey || "");

const { sqlite } = await import("https://esm.town/v/stevekrouse/sqlite");

const { OpenAI } = await import("https://esm.town/v/std/openai");

const openai = new OpenAI();

const SCHEMA_VERSION = 2;

VALUES (?, ?, ?, ?, ?)

`, ["Demo User", "FREE", 0, "OpenAI", ""]);

user = (await sqlite.execute(`SELECT * FROM ${KEY}_users_${SCHEMA_VERSION} LIMIT 1`)).rows[0];

headers: { "Content-Type": "application/json" }

// Generate content using OpenAI

const completion = await openai.chat.completions.create({

messages: [

meme_generator

@ajax

* Check if a string is valid base64

* @param {string} str - String to check

* @returns {boolean} True if valid base64
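The JSDoc above documents a base64 check, but the function body is not part of the matched lines. One possible implementation of that contract, offered as a sketch rather than the val's actual code, tests the standard alphabet and padding with a regular expression. The name `isValidBase64` is assumed.

```typescript
/**
 * Check if a string is valid base64 (standard alphabet, padded).
 * Sketch only: URL-safe base64 ("-" and "_") and unpadded input are rejected.
 */
function isValidBase64(str: string): boolean {
  // Padded base64 always has a length that is a multiple of four.
  if (str.length === 0 || str.length % 4 !== 0) return false;
  // One or more alphabet characters, followed by at most two "=" padding characters.
  return /^[A-Za-z0-9+/]+={0,2}$/.test(str);
}
```

This version treats the empty string as invalid, a choice that suits image payloads where an empty result signals a failed generation.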

HTTP

import { openai } from "npm:@ai-sdk/openai";

import { experimental_generateImage as generateImage } from "npm:ai";

* Check if a string is valid base64

try {

imageResult = await generateImage({

model: openai.image("dall-e-3"),

prompt: meme_idea,

} catch (error) {

dailySlackStandup

@dnishiyama

// Depending on the day do one of these:

Cron

import { fetch } from "https://esm.town/v/std/fetch";

import { OpenAI } from "https://esm.town/v/std/openai";

import process from "node:process";

const openai = new OpenAI();

// Depending on the day do one of these:

console.log(prompt);

const completion = await openai.chat.completions.create({

messages: [

motionlessPurpleBat

@carts

An interactive, runnable TypeScript val by carts

HTTP

import { OpenAI } from "https://esm.town/v/std/openai?v=4";

const prompt = "Tell me a dad joke. Format the response as JSON with 'setup' and 'punchline' keys.";

export default async function dailyDadJoke(req: Request): Promise<Response> {

const openai = new OpenAI();

const resp = await openai.chat.completions.create({

messages: [
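The prompt asks the model for JSON with `setup` and `punchline` keys, but a model is not guaranteed to comply. A small type guard, sketched below, is one way to validate the reply before returning it. `DadJoke`, `isDadJoke`, and `parseDadJoke` are hypothetical names and do not appear in the val.

```typescript
interface DadJoke {
  setup: string;
  punchline: string;
}

// Type guard: true only when both keys requested in the prompt are present as strings.
function isDadJoke(value: unknown): value is DadJoke {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return typeof candidate.setup === "string" && typeof candidate.punchline === "string";
}

// Returns null instead of throwing so the caller can choose its own error response.
function parseDadJoke(raw: string): DadJoke | null {
  try {
    const parsed: unknown = JSON.parse(raw);
    return isDadJoke(parsed) ? parsed : null;
  } catch {
    return null;
  }
}
```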

GistGPT

@weaverwhale

GistGPT: a helpful assistant who provides the gist of a gist. How to use: "/" and "/gist": the default response is to explain this file (I believe this is effectively real-time recursion?). "/gist?url={URL}": provide a raw file URL from GitHub, Bitbucket, GitLab, Val Town, etc., and GistGPT will provide you the gist of the code. "/about": "Tell me a little bit about yourself".
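The route behavior described above can be sketched without a web framework as a pure function from request URL to action. This is an illustration of the described routing, not the val's Hono code. `Route`, `resolveRoute`, and the `SELF_URL` placeholder are hypothetical, and the real default file URL is not shown in the listing.

```typescript
type Route =
  | { kind: "gist"; target: string }
  | { kind: "about" }
  | { kind: "notFound" };

// Hypothetical placeholder for "this file"; the val's real default URL is not shown.
const SELF_URL = "https://example.com/gistgpt-source.ts";

function resolveRoute(requestUrl: string): Route {
  const url = new URL(requestUrl);
  switch (url.pathname) {
    case "/":
    case "/gist":
      // Without a ?url= parameter, fall back to explaining the service's own source.
      return { kind: "gist", target: url.searchParams.get("url") ?? SELF_URL };
    case "/about":
      return { kind: "about" };
    default:
      return { kind: "notFound" };
  }
}
```

For example, `resolveRoute("https://host.example/gist?url=https://example.com/raw.ts")` yields a `gist` route whose `target` is the raw file URL, which is the string that `gistGPT` would then fetch.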

HTTP

import { Hono } from "npm:hono";

import { OpenAI } from "npm:openai";

const gistGPT = async (input: string, about?: boolean) => {

const chatInput = about ? input : await (await fetch(input)).text();

const openai = new OpenAI();

let chatCompletion = await openai.chat.completions.create({

messages: [